Author: Chris Nickson

Peer reviewer: Aidan Burrell

Nuts and Bolts of EBM Part 1

This term at The Alfred ICU Journal Club we are going back to basics to examine the nuts and bolts of evidence-based medicine (EBM), rather than solely deep diving into the latest and greatest (often not the same thing!) medical literature. Thus, we are providing a series of tutorials on evidence-based medicine, critical appraisal and how to apply research in clinical practice.

First up, let’s get all ‘meta’ and consider why we even bother with Journal Club.

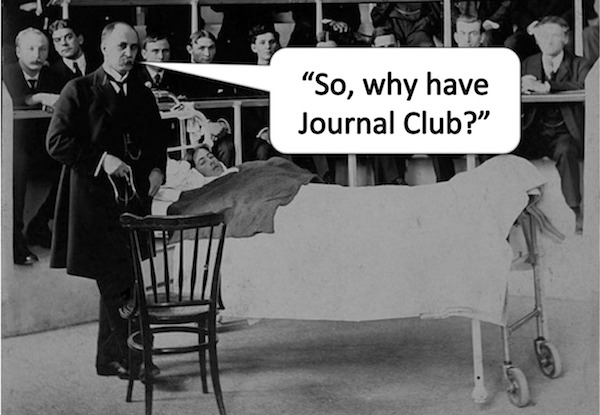

Q1. What would our practice look like if it wasn’t informed by scientific research and we didn’t think critically?

I imagine it might look a bit like this:

However, we should not be too smug.

We are kidding ourselves if we think that everything we do in intensive care is built upon an unshakeable foundation of scientific truth and certainty.

Some of what we do has more than a touch of witchcraft about it, and it is not always clear which practices rest on solid evidence and which do not.

Critical thinking and evidence-based medicine are our most important defenses against the dark arts!

Q2. What is ‘Evidence-based Medicine’ (EBM)?

In 1996, David Sackett and colleagues originally defined EBM as:

“the conscientious and judicious use of current best evidence from clinical research in the management of individual patients”

This means we have to think hard, reason, and consider all the relevant factors. We can’t use any old evidence, only the current best evidence. We have to take the findings of clinical research, which is typically conducted in selected population samples and may be subject to methodological flaws, and carefully apply them to the unique person in front of us.

My friend Ken Milne, of the Skeptic’s Guide to Emergency Medicine (SGEM) fame, has added that the definition should also include ‘through shared decision making’. This emphasises that when the medical team chooses evidence-based treatment options, those options must be in keeping with the patient’s values.

EBM is hard and can easily be corrupted into what I call ‘pseudo-EBM’, or in some cases even ‘anti-EBM’. Here are a few examples of ‘what EBM is not’:

- “I saw a patient that…” (old school anecdote-based medicine)

- “Prof said that…” (classic eminence-based medicine)

- “I heard a podcast that…” (often a heady mix of eminence-based and so-called celebrity-based practice)

- “I read a paper that… and it concluded that…” (not all articles are created equal – is it internally valid? Is it the current best evidence available?)

- “A study with 100,000 patients can’t be wrong!” (yes it can… even if the trial is internally valid – i.e. well designed and performed – did patients like the one in front of you take part in the study?)

- “Who cares what the patient thinks, the article says we should do this” (paternalism is not part of modern EBM, we need to ensure therapies are consistent with our patient’s values)

I suspect we are all guilty of ‘anti-EBM’ and variations of ‘pseudo-EBM’ from time to time. Regardless, we should all strive to do better. Yes, EBM is hard, but that doesn’t mean we should not do it!

Q3. What is the difference between ‘internal validity’ and ‘external validity’?

The question ‘is this study valid?’ is always at the forefront of our minds when we critically appraise the medical literature. In general terms, validity is “the quality of being true or correct”; in research, it refers to the strength of the results and how accurately they reflect the real world.

Internal validity refers to the accuracy of a trial: it is the extent to which the design and conduct of the trial eliminate the possibility of bias. If a study lacks internal validity, its results must not be applied to any clinical setting.

On the other hand, a study that is internally valid may not be externally valid. External validity can be thought of as a study’s “generalizability” or “applicability”. It is the extent to which the results of a trial provide a correct basis for generalisations to other circumstances. This is important because study findings can only be confidently applied to clinical settings that are the same as, or similar to, those used in the study.

Thus, an internally valid study may not be applicable to your clinical setting if your patients have different characteristics (e.g. greater illness severity, different demographics, etc) or your system of care is different (e.g. different co-interventions used).

Q4. What is meant by ‘levels of evidence’?

When we talk about ‘levels of evidence’ we are referring to how much weight we should put on the findings of clinical research. Our decision making should be swayed more by high-level evidence than by low-level evidence. The level of evidence depends on the study design used as well as the outcomes measured. For instance, a randomised controlled trial with mortality as an outcome provides stronger evidence to guide clinical decision making than does an observational study using a surrogate marker as an endpoint.

Numerous classification schemes of levels of evidence exist and have been adopted by different organisations. In general, the higher the level of evidence, the more likely the outcome of the research is causally linked to the intervention studied and the greater its applicability to human populations.

The Australian NHMRC levels of evidence are as follows:

Level I

- Evidence obtained from a systematic review of all relevant randomised controlled trials.

Level II

- Evidence obtained from at least one properly designed randomised controlled trial.

Level III-1

- Evidence obtained from well-designed pseudo-randomised controlled trials (alternate allocation or some other method).

Level III-2

- Evidence obtained from comparative studies with concurrent controls and allocation not randomised (cohort studies), case control studies, or interrupted time series with a control group.

Level III-3

- Evidence obtained from comparative studies with historical control, two or more single-arm studies, or interrupted time series without a parallel control group.

Level IV

- Evidence obtained from case series, either post-test or pre-test and post-test.

Q5. What is ‘science-based medicine’ and what has it got to do with the ‘Tooth Fairy’?

Science-based medicine is a relatively new term that can be thought of as a refinement of evidence-based medicine and the antidote to ‘Tooth Fairy science’.

Tooth Fairy science involves “doing research on a phenomenon before establishing that the phenomenon exists”. For instance, as Harriet Hall explains, “you could measure how much money the Tooth Fairy leaves under the pillow, whether she leaves more cash for the first or last tooth, whether the payoff is greater if you leave the tooth in a plastic baggie versus wrapped in Kleenex. You can get all kinds of good data that is reproducible and statistically significant. Yes, you have learned something. But you haven’t learned what you think you’ve learned, because you haven’t bothered to establish whether the Tooth Fairy really exists.”

Science-based medicine emphasises an often neglected component of evidence-based medicine: the consideration of prior plausibility. Regardless of what the data show, the prior plausibility of the existence of the Tooth Fairy is vanishingly small, and the phenomenon can be easily explained by more likely mechanisms. Thus, we shouldn’t consider clinical studies alone; we should also consider pre-clinical studies and be informed by the basic sciences (although basing clinical decisions solely on animal studies and basic lab science is certainly even more stupid!). Furthermore, because even well-designed new trials can produce unexpected results due to chance, we need to take a Bayesian approach and consider all of the previously published studies: what is the prior probability of something being true, and how does that change with the result of a new trial?
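The Bayesian question above can be made concrete with a small worked example. The Python sketch below is an illustration under stated assumptions, not part of the original argument: it treats a trial result as simply ‘statistically significant’ or not, assumes a conventional power of 80% and a false-positive rate (alpha) of 5%, and uses a hypothetical function name, `posterior_probability`. Bayes’ theorem then gives the probability that a hypothesis is actually true after a significant result.

```python
def posterior_probability(prior: float, power: float = 0.8, alpha: float = 0.05) -> float:
    """Probability a hypothesis is true, given a statistically significant trial result.

    Assumes a binary 'significant / not significant' outcome, so Bayes' theorem gives:
        P(true | significant) = power * prior / (power * prior + alpha * (1 - prior))
    The default power (0.8) and alpha (0.05) are illustrative assumptions.
    """
    if not 0.0 <= prior <= 1.0:
        raise ValueError("prior must be between 0 and 1")
    true_positive = power * prior            # real effect, correctly detected
    false_positive = alpha * (1.0 - prior)   # no real effect, 'significant' by chance
    return true_positive / (true_positive + false_positive)


# Same 'positive' trial result, very different prior plausibility
for label, prior in [("highly implausible", 0.01), ("coin toss", 0.5), ("strongly supported", 0.9)]:
    print(f"{label:>18}: prior {prior:.2f} -> posterior {posterior_probability(prior):.2f}")
```

With these assumed values, a ‘significant’ trial of a highly implausible hypothesis (prior 0.01) leaves only about a 14% probability that the effect is real, whereas the same result for a hypothesis with a 50:50 prior gives about 94%. Feeding the posterior back in as the prior for the next trial is one simple way to model how each new study should shift our beliefs.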

The scientific method is pretty simple. At its core, it is just an approach to problem-solving that involves forming a hypothesis and then testing it, ideally changing one variable at a time. It is how we distinguish fact from fiction. However, applying the scientific method to medicine is not so simple, because there are so many variables and possible confounders to consider: genetic variation, differences in demographics and comorbidities, difficulties with diagnostic criteria, differences in therapies and systems of care, and so on. This is why critical appraisal of the medical literature is so important for clinical practice.

Q6. What are the 5 Steps of evidence-based practice?

The practice of evidence-based medicine involves these 5 steps:

- Translation of uncertainty into an answerable question (ask question)

- Systematic retrieval of best evidence available (find evidence)

- Critical appraisal of evidence for validity, clinical relevance, and applicability (critically appraise)

- Application of results in practice (make clinical decisions)

- Evaluation of performance (audit)

Asking the right question is important, and the PICO format is a useful way of formulating a question properly. The question should specify:

- Population/patient characteristics

- Intervention

- Comparison or control

- Outcomes (ideally think about “patient orientated outcomes that matter” rather than surrogates)
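One way to see why PICO helps is to treat a clinical question as a record with four required fields. The Python sketch below is purely illustrative: the `PicoQuestion` class, its `as_question` method, and the example clinical question are hypothetical, not a standard tool. Refusing to accept a blank element forces each part of the question to be stated explicitly before the literature search begins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PicoQuestion:
    """Hypothetical container for a PICO-formatted clinical question."""
    population: str    # P: population / patient characteristics
    intervention: str  # I: the intervention being considered
    comparison: str    # C: comparison or control
    outcome: str       # O: ideally a patient-oriented outcome, not a surrogate

    def __post_init__(self) -> None:
        # Refuse to build a question with any PICO element left blank
        for name in ("population", "intervention", "comparison", "outcome"):
            if not getattr(self, name).strip():
                raise ValueError(f"PICO element '{name}' must not be blank")

    def as_question(self) -> str:
        # Assemble the four elements into a single answerable question
        return (f"In {self.population}, does {self.intervention}, "
                f"compared with {self.comparison}, change {self.outcome}?")


# Hypothetical example question, for illustration only
question = PicoQuestion(
    population="adults with septic shock",
    intervention="hydrocortisone",
    comparison="placebo",
    outcome="90-day mortality",
)
print(question.as_question())
```

The example prints: “In adults with septic shock, does hydrocortisone, compared with placebo, change 90-day mortality?”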

However, a single shift in the ICU can generate so many questions that no one person can possibly pursue the answers to all questions in a rigorous fashion. That’s why guidelines are so important for promoting evidence-based practice.

Step 2, the systematic search for literature, is easily underestimated and is best done with the help of a librarian!

Step 3, the nitty-gritty of critical appraisal, is something we will discuss in subsequent ‘nuts and bolts’ episodes and is the core of what we do ‘week in and week out’ at The Alfred ICU Journal Club. A number of checklists have been created to help with this.

Finally, we don’t critically appraise the literature just for the sake of it. Ultimately, we need to use the information to help improve patient outcomes and to improve our performance as clinicians.

Q7. So, going back to the start, why have Journal Club?

The great Sir William Osler is believed to have established the first formalized journal club at McGill University in Montreal in 1875. The original purpose of Osler’s journal club was apparently “for the purchase and distribution of periodicals to which he could ill afford to subscribe.” This ties in nicely with my own personal FOAM ethos, but I think the expectations of the modern journal club can be taken up a notch or two.

The Alfred ICU Journal Club fulfills a number of roles:

- it provides a setting for us to develop critical thinking skills and learn how to critically appraise the medical literature

- it helps us keep up with the current literature and enables us to revisit the landmark clinical research on which our practice is based

- increasingly, it serves to help generate new research ideas, and

- ultimately it must ‘close the loop’ to improve patient outcomes, through new or updated clinical guidelines and by changing how we make clinical decisions

Above all, Journal Club is about improving patient care.

References and links

Lifeinthefastlane.com’s Critical Care Compendium

- Animal and laboratory studies

- Critical thinking

- Evidence-based medicine

- Medical reversal

- Levels and Grades of Evidence

- Validity of Clinical Research

Other sources

- Milne, K. Teaching EBM So It Doesn’t Suck [Internet]. Iteachem.net. 2016 [cited 16 May 2016]. Available from: http://iteachem.net/2016/02/teaching-ebm-doesnt-suck/

- Nickson, CP and Carley, S. How to make Journal Club Work [Internet]. Iteachem.net. 2016 [cited 16 May 2016]. Available from: http://iteachem.net/2014/01/make-journal-club-work/

- Novella, S. It’s Time for Science-Based Medicine – CSI [Internet]. Csicop.org. 2016 [cited 16 May 2016]. Available from: http://www.csicop.org/si/show/its_time_for_science-based_medicine

- Tooth Fairy science – The Skeptic’s Dictionary – Skepdic.com [Internet]. Skepdic.com. 2016 [cited 16 May 2016]. Available from: http://skepdic.com/toothfairyscience.html
